I’ll be the first to admit that I don’t know a lot about and have never given much thought to the goings on in Oklahoma. Back in 1982, Lou Gossett Jr. told me there were only two things that come out of Oklahoma, but that was about a decade before Garth Brooks and another decade before Carrie Underwood. A few years back, I learned from Vince Gill that in the 1960s Oklahoma City, of all places, was home to the Boston Bruins farm team that developed the players who formed the core of the 2x Stanley Cup Champion Big Bad Bruins. And I know that sometimes the surrey with the fringe on top carries your career trajectory full circle from our nation’s capital to little old Rhode Island before settling back down in Tulsa.

But that’s about it.

With regard to state assessment, for the most part Oklahoma has gone quietly about its business, the only waves coming from the state being the wavin’ wheat which can sure smell sweet when the wind comes sweepin’ down the plain.

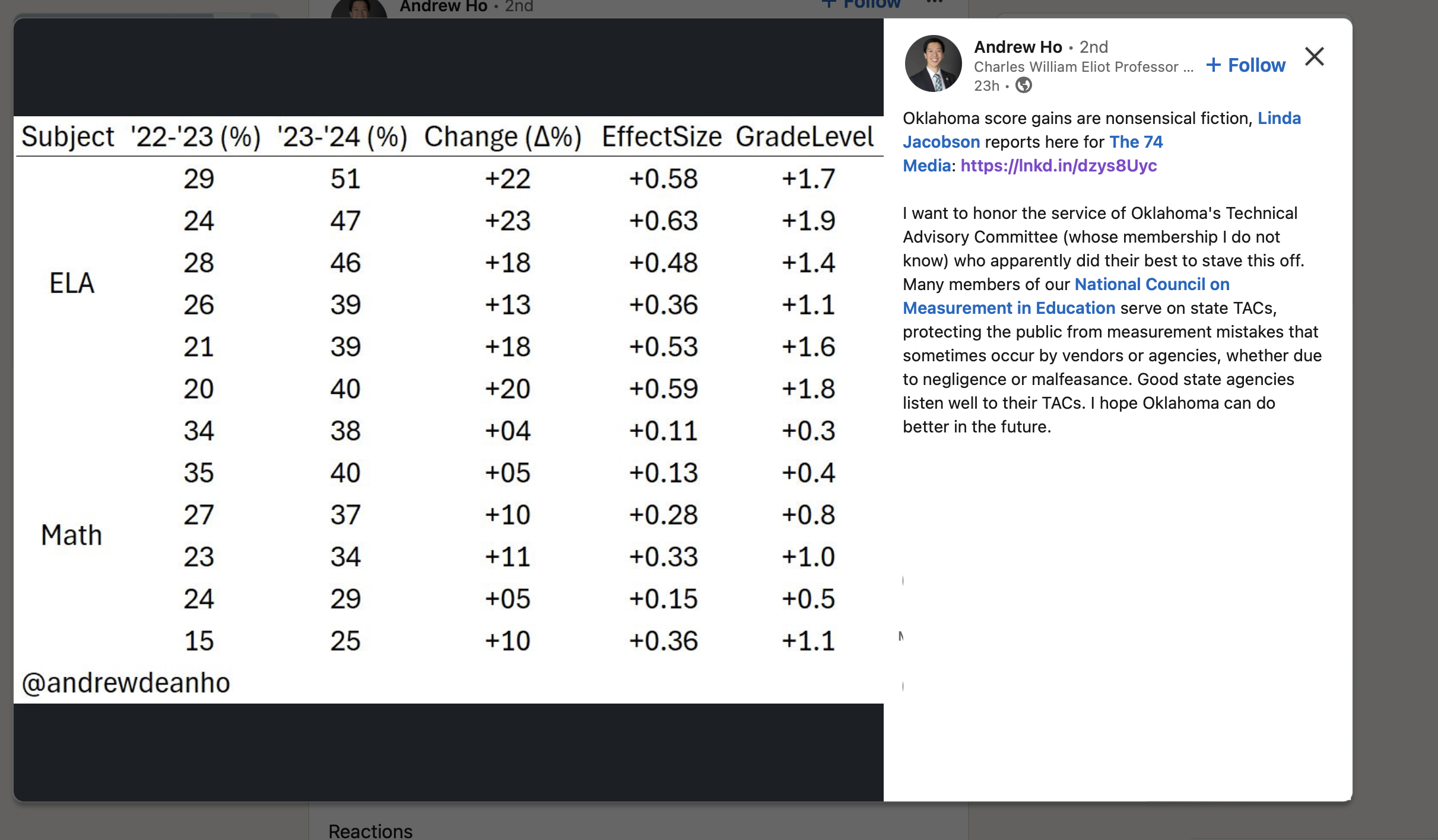

What then could Oklahoma possibly have done to set the assessment and measurement worlds atwitter this week from Kansas City to Cambridge to California and beyond, to elicit the following post from NCME president Andrew Ho:

Turns out, not much. Well, definitely not nearly enough.

What we’ve got here is failure to communicate

In a nutshell, this summer Oklahoma reset the achievement standards on their state assessment, the result being a pretty large increase in the number of students whose performance was classified as Proficient.

How large an increase? Larger than Oklahoma’s world’s largest bottle of hair tonic; and apparently just as hard to swallow. [but seriously kids, don’t drink hair tonic]

Now, resetting achievement standards is nothing new. Oklahoma apparently revisits their content and achievement standards every 6-7 years – which actually qualifies as a damned good, if not best, practice in state assessment.

Also not new is the outcome in which the “adjusted” standards result in whale-size increases in the percentage of kids classified as Proficient. In fact, back in the early Naughts, when Rod Paige and Margaret Spellings were roaming the halls of the LBJ building, the phenomenon was unfairly labelled as states engaged in a “race to the bottom.” A few years later, of course, Arne Duncan and Barack Obama countered the race to the bottom with the equally ill-named, as it turned out, Race to the Top.

To give just a little NAEP context (because we love our NAEP context), the new achievement cut scores in Oklahoma appear to be much closer to the NAEP Basic threshold, while the old cuts were closer to the ballpark of NAEP Proficient. In fact, in 2019, Oklahoma appeared to be one of only a handful of state assessment programs with cut scores “close to” NAEP Proficient in reading and a couple of handfuls in mathematics, the vast majority coming in closer to NAEP Basic.

So, why the kerfuffle?

What Oklahoma didn’t do very well, an understatement based on all that has been reported, is communicate with stakeholders about the change in achievement standards, failing to convey somewhat critical information such as why the change was made, how to interpret the new standards, how NOT to compare the 2024 and 2023 results, or how this will affect school accountability.

Oops.

To be fair, based on a cursory review of the OK department website:

- The department had announced throughout the spring that the spring 2024 tests would be based on revised content standards (2021 and 2022) and that standard setting would take place this summer.

- It also appears that although results have been provided to districts for their review (another good practice), there has not yet been an official release of the 2024 state assessment results – a distinction without a difference, sure.

Given that 2024 was likely not the first rodeo for a lot of district assessment people, we can assume that most of them knew that some change was coming. They just needed a lot more help in understanding how to interpret the new results, a conclusion reflected in headlines and comments presented in some of the media coverage last week.

So, how did Oklahoma state assessment results become a national story, and why am I writing about it this week?

Well, it’s complicated (unlike the change in achievement standards), but it seems to me that there are two main reasons:

The first is that although Oklahoma’s state assessment program has flown under the radar, the same cannot be said for its state chief, who by his own doing finds himself smack dab in the middle of a culture war or holy war, or some combination of the two, and that might be the least of his concerns. At this point, therefore, anything the chief and department do is subject to heightened scrutiny and also subject to people taking any opportunity to pile on (perhaps rightfully so).

The second reason, …

We’re Just A Field That Can’t Say No

Self-awareness time here folks: In our role as assessment specialists, we don’t have a long history of saying no. In a bygone era, one might characterize us as kind of easy; eager to please and loose with regard to Standards. And those are the descriptions that would come from our friends. I don’t have to go into what our enemies think of us.

In part, we simply are who we are; as a group, introverts who don’t like confrontation. In part, we are the way that we are because of the nature of our relationships with those engaged in state assessment.

Most of us interacting with states are doing so in our capacity as employees of a testing company. It’s a business. The relationship is client-vendor or client-contractor, and Rule #1 always has been and always will be, do what you can to make the client happy.

Does that include doing things that are clearly inappropriate? Of course not. But there is surprisingly little that is black-and-white and an awful lot that is gray, foggy, and blurry in state testing – otherwise we wouldn’t still be leaning so heavily on multiple-choice items. And there are lots of “have to dos” that you, well, have to do because they are written in the law.

A smaller number of us are employed within a state department of education, surviving day-to-day in the belly of the beast. We feel firsthand the effects of the feud between the governor and state chief. We stand in the wake of the slings-and-arrows hurled at the state chief or our assessment director, and we catch the flak from those on the other end of phones that never stop ringing. We are there to implement policy and laws. As I would tell my staff when they complained about this law or that regulation, “ours is not to question why…”

We make our 30-second pitches to the chief, advising her on what she can and cannot say with confidence about the state test results. We try to figure out a way to allow him to say what he needs to say. We draft reports, considering tone, carefully selecting each word, anticipating its impact. We warn the superintendent of the small school district that the large gain that they saw this year might just as likely be a loss next year, the Kane & Staiger (2002) arrowhead chart embedded deep within our brains. Sometimes they listen to us; other times it almost comes to blows when a Deputy Commissioner accuses us of not caring about teachers and kids. Such is life.

Then there is the even smaller group of us, the cohort who serve as technical advisors to a state, each of us with experience and/or expertise in one or more aspects of state assessment. We may meet a few times per year, less frequently now than in the past. We hear the issues/problems as they are presented to us, read between the lines, and probe for the important parts that have not been mentioned. If a state is considering options, we offer frank advice on the pros, cons, and consequences of each. (Those of us in the room that day will forever remember the phrase, “psychometrically, it reeks!”) But when a state has selected its option, we advise them on how best to implement it to maximize technical quality and minimize negative consequences (intended, unintended, and unanticipated).

In each of those roles, we help to “craft the narrative” for the media so that they can better convey the state’s message to the public. In each role, we engage with the media, but we know our limitations when dealing with them; we’ve all heard some things you can’t print in papers. And woe to the testing company president who decides to do an interview with the local press and says, “we told them not to use the test as a graduation requirement.”

And then there is that post that got me started down this road. The one from NCME via Andrew Ho; NCME because as president it’s not possible to separate the words of the man and the organization. In recent years, NCME has tried to be more proactive in the world of state assessment, promoting good uses and calling out bad. But with limited information it’s tough to know when to engage or how to respond when EdWeek or The 74 calls on a deadline. You jump in too soon and run the risk of misreading the situation, fanning flames, or making a federal case out of a molehill. You wait too long or don’t engage at all when you should have and … I’m sure that you can conjure up your own lists of examples on both sides of that equation.

I’ve always looked forward to reading Andrew’s posts on Twitter (X) and LinkedIn; cogent, concise thoughts usually accompanied by an informative graphic which I have come to refer to as a “Ho-table” – rhymes with notable, quotable, and totable. I hope that my writing can meet the same standard, but I don’t envy the position he is in now in this fast-paced, react now, ask questions later, social media world. He’s walking a tightrope without a net.

I don’t envy him, but I wish him well.

And I wish Oklahoma, its assessment specialists, its assessment contractor, its TAC, and even its chief well. And I give them all the benefit of the doubt, until proven otherwise.

And whenever I think about offering solicited or unsolicited advice to a state on its assessment program, I try to remember the words of one of the most famous sons of Oklahoma, Will Rogers:

There is nothing as easy as denouncing. It don’t take much to see that something is wrong but it does take some eyesight to see what will put it right again.

OK

Header image by Brad Mardis from Pixabay
