I am in a state.

It’s a state that I find myself in every two years when, like clockwork, NAEP Reading and Mathematics test results are released. (Well, clockwork like the 12-year-old clock from Target that runs on a single AA battery and hangs on an outer wall in our poorly insulated family room.) On the one hand, there is my natural inclination to downplay the relevance and importance of NAEP results, no doubt a byproduct of my longstanding and ongoing love/hate relationship with our nation’s report card. On the other hand, there are the people in the field for whom I have the utmost respect and admiration touting the importance of NAEP and the good that can come from the information that we can glean from its results.

What’s an aging state assessment specialist and semi-retired consultant with a blog to think and to do?

The “what to think” part is a bit tricky, but perhaps I can work it out via the “what to do” task of writing a blog post.

Let’s try to get to the root of my complicated feelings toward NAEP. They predate my NAGB nomination being rejected out of hand, so we can eliminate that as the cause. It certainly has nothing to do with the top-notch NAGB staff; and it probably has little to do with the test itself because after all, who really knows all that much about the test.

If I’m being really honest with myself, at the core, there’s definitely an unhealthy amount of envy or professional jealousy that may have started when we were taken to court (and lost) for trying to delay the reporting of state test results for a couple of days while we finished writing an interpretive report. NAEP results, in contrast, are released whenever they damn well please.

However, over the course of a 30-year career in state assessment, the beginning of which coincided with the introduction of state NAEP, there are two aspects of NAEP that have repeatedly left me shaking my head and asking, “Why not us?”

First, there is NAEP’s uncanny ability to just keep on going no matter what seems to go wrong. As a veteran of many long-defunct and often short-lived state assessment programs, I find NAEP’s staying power amazing. Kudos to Lesley Muldoon, whose charge was to help NAEP “maintain its relevance in a changing education landscape.”

Second, there’s that NAEP attitude. You know what I mean: that never-gets-its-hands-dirty, always-operating-above-the-fray, too-cool-for-school swagger that 99% of us involved in state assessment and psychometrics could never even dream of pulling off.

NAEP Can Survive

If there’s one thing that we have learned about NAEP over the years, it is that, like country boys and cockroaches, NAEP can survive.

In the late 1980s, when the push for a national assessment that emerged from the Charlottesville Summit seemed to threaten NAEP, state NAEP was hastily introduced as a poor man’s alternative to a national test, but one that carried the imprimatur of NAEP – the gold standard.

After using the better part of the 1990s to work out kinks like how to handle accommodations and what “scale” to use, state NAEP seemed ready to rock and roll. But then along came NCLB, with an exponential increase in state testing that threatened states’ voluntary participation in NAEP. No problem: state participation in NAEP was now mandatory (although student participation remains voluntary).

Fast forward a dozen years and it appears that the national assessment consortia will make state NAEP obsolete. Was NAEP worried? Did it even blink? No, of course not; and we all know how that turned out.

Through fundamentally flawed achievement levels, a reading anomaly, an awkward transition to computer-based testing, the ill-conceived decision to drop the fabled pantyhose chart in favor of a funky online map, and the gestation period of an elephant* to produce a fairly limited and mundane set of test results, NAEP survives.

Too cool to care

Think about it. What other assessment program could declare on its website for close to 20 years that its achievement levels had been deemed fundamentally flawed and still be reporting after 30 years that those achievement levels were being used on a trial basis?

And while most of us have trouble claiming with a straight face to have maintained a scale across three or four test administrations, NAEP has confidently stood behind its scale for three or four decades.

Ah, the NAEP scale. What to make of a scale that looks like a vertical scale and quacks like a vertical scale but, more likely than not, really isn’t one? There is a 30-year-old ex post facto study showing that it’s close enough, and given the point above about the scale, does it really matter anyway?

When NAEP became mandatory, many were calling for NAEP to be used formally to audit state assessments and achievement standards. NAEP’s response: that’s OK, we’re good; but hey, if you want to do that on your own, be our guest.

Finally, there’s the Common Core State Standards.

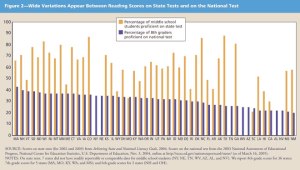

It is not really far-fetched at all to claim that NAEP is responsible for the CCSS. History is replete with images that sparked movements, caused turmoil, or otherwise jolted the status quo; and in the case of education reform, this graphic was the image that shook the state assessment world and led directly to the CCSS.

When 44 states adopted the CCSS out of the gate, for all intents and purposes we now had a national (but not federal, of course) set of achievement standards. So, did NAEP join in and adopt the standards it inspired? No, of course not.

NAEP maintains its own Framework, its own definition of Proficient, and its own vision of college-and-career readiness.

Cool and cold.

You have to respect that.

But as we eagerly await NAEP Day and the imminent release of the 2024 NAEP Reading and Mathematics results, I keep coming back to one question:

Who are these results for?

Our Super Bowl®

NAEP Day has been described as our version of the NFL’s Super Bowl and the more I ponder that comparison, the better it fits.

The Super Bowl long ago transcended football and diehard football fans to become a quasi-national holiday, the biggest day of the year for at-home gatherings. Sure, a football champion is crowned, but for years, commercials and the halftime show have garnered more attention than the game.

Most significantly, Super Sunday ropes in the casual observer, shining a spotlight on football for people who couldn’t care less about football the other 364 days of the year.

In many ways, that’s NAEP in a nutshell.

NAEP is not intended for me.

NAEP is not for those of us toiling in state assessment day-after-day, year-after-year. If we are doing our jobs well, NAEP is going to tell us nothing about student achievement in our state that we didn’t already know – in the same way that our state tests shouldn’t be telling local school administrators and teachers anything about their schools and students that they didn’t already know. (True, NAEP serves an auditing function in places where things aren’t going well, but that’s a whole different conversation and set of problems.)

No, NAEP’s “purpose” is to shake up and wake up the casual observers who also happen to be in positions to shape policy and provide funding.

With its bright spotlight, NAEP provides information that allows those playing the long game of Education Reform to make their case to those shaken-up movers and shakers. It may allow state-based reformers to show that their efforts are paying off or to make the case that they need more support and commitment to keep up with the Joneses and Mississippi. It may allow national reformers to make their case that we need to repair and rethink the education infrastructure.

NAEP provides fodder for academicians, statisticians, econometricians, and perhaps even a few psychometricians to rework, recondition, reinterpret, and recast its scores to craft any number of narratives.

NAEP 2024 results that are somewhat better than 2022 but still below 2019 will unleash countless analyses to determine whether we are witnessing a recovery or a continuation of a decline that began long before the pandemic. Or both.

And that, for the most part, is all good and as it should be.

With all due respect to those who argue that educators should be paying close attention to the NAEP results next week, that’s just poppycock. Any spare time educators have when they are not focused on teaching the kids in front of them would be much better spent selecting squares in their school and office Super Bowl pools than devoting a scintilla of energy to NAEP scores.

NAEP generates discussion and policy at the national level.

State tests generate discussion and policy at the state level.

There are so many other tests and types of assessment, including interim assessments, that provide information to generate discussion and action at the district, school, and classroom levels.

So, for a few days next week and perhaps for another blog post, I will set aside my petty jealousies and appreciate NAEP for what it is and the role that it plays in the assessment ecosystem.

Happy NAEP Day!

Header Image by Darwin Laganzon from Pixabay

*Exaggeration for effect.
